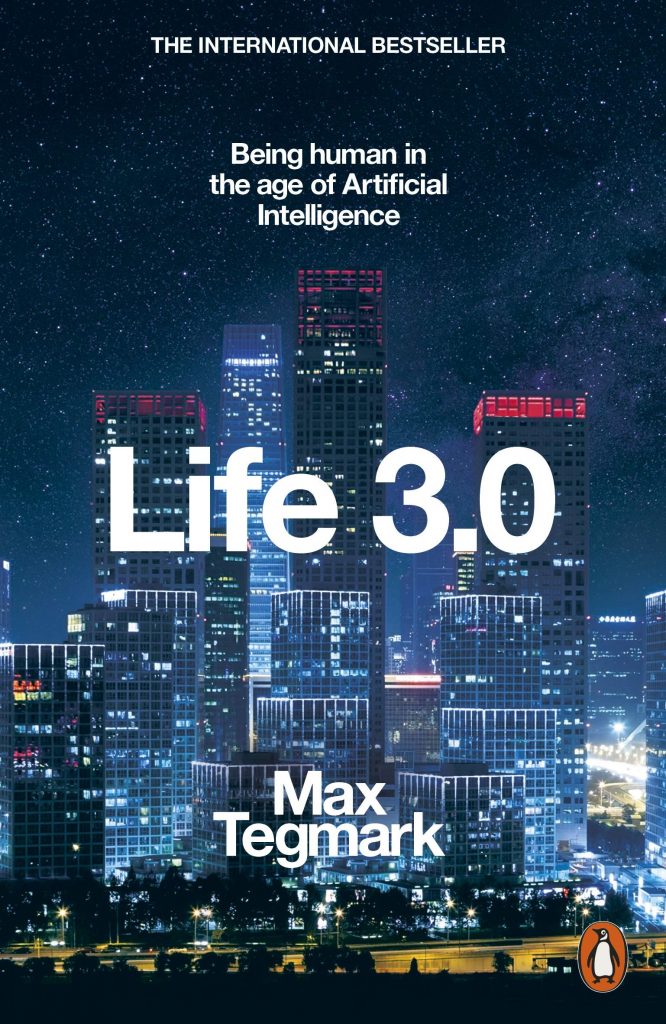

In the highly informative yet accessible book “Life 3.0”, author Max Tegmark presents the developments, challenges and possibilities of superhuman AI, explaining the topics that form the basis of the discussion while shedding light on prevalent misconceptions in the field. The reader is challenged with clever insights and difficult problems about law, ethics, society and meaning, as well as more fundamental questions about intelligence, life and consciousness, much of it grounded in a physics perspective.

The world of Artificial Intelligence (AI) presently generates a lot of excitement, along with fears and concerns, because of its potential to disrupt and upend the structure of society as we know it. Some of these concerns are largely misguided and misinformed (e.g., killer robots), while some real risks are underestimated or ignored. “Narrow intelligence” is defined as the ability to accomplish a narrow set of goals, such as driving a car or playing chess. This is contrasted with “general intelligence”, the ability to accomplish any goal, including learning. Hence the focus of this discussion is on Artificial General Intelligence (AGI).

The author’s thesis is that even human-level AGI is not inevitable, and that there are multiple possible approaches to achieving superhuman AI, each leading to different outcomes. Regardless of how the future turns out, AI safety research is vital to avoid misalignment between the goals of AI and the goals of the general public. This article introduces some of the key ideas he expresses throughout the book.

Life X.0

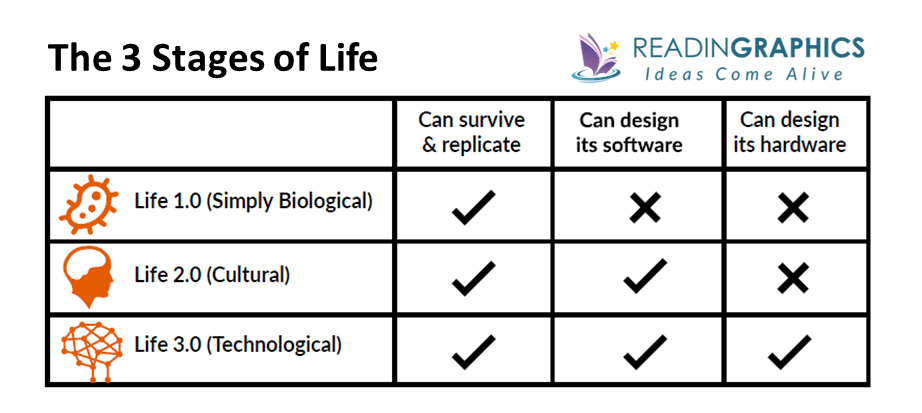

In Life 3.0, three stages of life are defined based on their complexity: biological evolution (1.0), cultural evolution (2.0) and technological evolution (3.0). Humans and animals fall under Life 2.0, being able to acquire knowledge beyond what they are born with. In the image above, hardware refers to the physical body of the life form and software refers to the algorithms behind the abilities we learn: walking, reading, writing, calculating, recognizing faces and laughing.

It is speculated that Life 3.0 may arrive within this century, made possible by advancements in AGI. There are three main camps in this controversy: techno-skeptics, digital utopians and the beneficial-AI movement. Techno-skeptics believe that building AGI is so hard that it won’t happen for hundreds of years. Digital utopians, however, view it as likely this century and believe it should be embraced as the natural and desirable next step in the evolution of life. The beneficial-AI movement also views it as a possibility this century, but holds that a good outcome is not guaranteed and needs to be ensured by AI-safety research.

Learn about Learning

Early on, the author focuses on topics that are fundamental to developing AI and central to the later discussions: answering the question “What is intelligence?”, and similarly for memory, computation and learning. He puts forward a definition of intelligence as “the ability to accomplish complex goals”. The notion of “learning” can be extended to any physical system, and it is tightly bound to computation and memory. Simply by obeying the laws of physics, matter can “learn” by rearranging itself to get better and better at computing a desired function. Neural networks are therefore considered a powerful substrate for learning, since they rearrange themselves to get better at implementing desired computations.
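To make the idea of a system “rearranging itself” to compute a desired function more concrete, here is a minimal sketch in Python. It is not taken from the book: the classic perceptron update rule, the NAND target function and all names (`NAND_EXAMPLES`, `predict`, `train`) are assumptions chosen purely for illustration. A single artificial neuron starts with all its parameters at zero and nudges them after every mistake until it reproduces the target truth table.

```python
from typing import List, Tuple

# Illustrative target (an assumption, not from the book):
# NAND outputs 0 only when both inputs are 1.
NAND_EXAMPLES: List[Tuple[Tuple[int, int], int]] = [
    ((0, 0), 1),
    ((0, 1), 1),
    ((1, 0), 1),
    ((1, 1), 0),
]


def predict(weights: List[int], bias: int, inputs: Tuple[int, int]) -> int:
    """Fire (return 1) if the weighted sum of the inputs plus the bias is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, learning_rate: int = 1, max_epochs: int = 100):
    """Perceptron rule: after each wrong answer, shift the parameters toward the target."""
    weights = [0, 0]
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                bias += learning_rate * error
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
        if mistakes == 0:  # every example is answered correctly; stop adjusting
            break
    return weights, bias


weights, bias = train(NAND_EXAMPLES)
learned = [predict(weights, bias, inputs) for inputs, _ in NAND_EXAMPLES]
print(learned)
```

Nothing in the code states the rule for NAND explicitly; the correct behaviour emerges only from repeated small adjustments, which is the sense of “learning” described above. Real neural networks use many such units and different update rules, so this should be read as a toy illustration only.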

Jobs, Justice and Generations

Near-term progress in AI has the potential to greatly improve our lives, particularly by making our personal lives, power grids and financial tasks more efficient, and even by saving lives through self-driving cars and AI-powered medical diagnoses. That would require allowing real-world systems to be controlled by AI, so it is crucial that we make AI more robust, doing what we want it to do. This need for robustness is paramount in fields such as law and justice. For instance, “robojudges” that run on neural networks trained on existing data may give a verdict without being able to give explicit reasons for it, since the inner workings of a neural network are not well understood even when it produces a result. The same goes for AI-controlled weapons systems.

There is the worry that AI may replace humans as the masters of the earth and eventually spread across the universe, but long before that, we must deal with intelligent machines replacing us on the job market. The author points out that this is not necessarily a bad thing, as long as society redistributes some of the AI-generated wealth to make everyone better off. With advance planning, a low-employment society may be viable, with people deriving their sense of purpose from activities other than jobs.

How will the future play out?

The book describes many fascinating aftermath scenarios that could unfold over thousands of years following the rise of AGI. Superintelligence may coexist peacefully with humans because it is forced to (an enslaved god AI) or simply because it is friendly. The latter could take the form of an AI-powered libertarian utopia, a protector god AI, a benevolent dictator or a “zookeeper” AI that comes to view humans as we currently view animals.

Superintelligence may also never be built, whether through a lack of incentive to build it, through humans deliberately forgetting the technology in order to revert to a simpler time, or through the rise of a surveillance state like the one described in George Orwell’s “1984”. On an even darker note, humans could go extinct and be replaced by AIs, either because the AIs choose to conquer us or because humans come to regard AIs as their descendants and choose not to reproduce.

Goals and how to view them

Chapter 7 deals with the definition of goals, cleverly explained in terms of the major fields of study that we engage in: Physics (the origin of goals), Biology (the evolution of goals), Psychology (the pursuit of and rebellion against goals), Engineering (outsourcing goals) and Ethics (choosing goals). Fascinatingly, the origin of goal-oriented behavior lies in the laws of physics. Entropy, which is a measure of disorder, increases as energy is dissipated. Life is a phenomenon that increases this disorder, dissipating energy by growing in complexity and replicating. Darwinian evolution thus shifts the goal from dissipation to replication, suggesting that as life evolves and becomes more skilled at reproducing, it ultimately facilitates greater dissipation of energy.

Since we’re building increasingly intelligent machines that exhibit goal-oriented behavior, we must strive to align machine goals with ours. There are many principles that humans agree should be implemented in AI, but how to do so is still an open problem.

The C-Word

The hotly debated topic of consciousness pops up in the field of AI as well. The question of whether AIs are conscious leads to several uncomfortable ethical and philosophical problems: Can AIs suffer? Should they have rights? We still disagree on what exactly consciousness is, so in this book it is defined broadly as “subjective experience”. That is, if it feels like something to be you right now, then you’re conscious.

A physics perspective can be used to approach the problem of whether anything is conscious. If some arrangements of particles are conscious (our brains, for instance), then which arrangements aren’t? The exact physical properties that distinguish conscious from unconscious systems are still unknown, but identifying them is an easier problem than figuring out why anything is conscious at all. Generalizing predictions about consciousness from brains to machines requires a theory of consciousness, and no such theory has yet been established.

Image Courtesies

- Featured Image: https://bit.ly/3IPr3JB

- Content Image 1: https://bit.ly/3aRIAEj

- Content Image 2: https://bit.ly/3J3o40h

- Content Image 3: https://bit.ly/3OlPAH4

References

Tegmark, M., Paalman, W. and Waa, F., 2018. Life 3.0. Amsterdam: Maven Publishing.